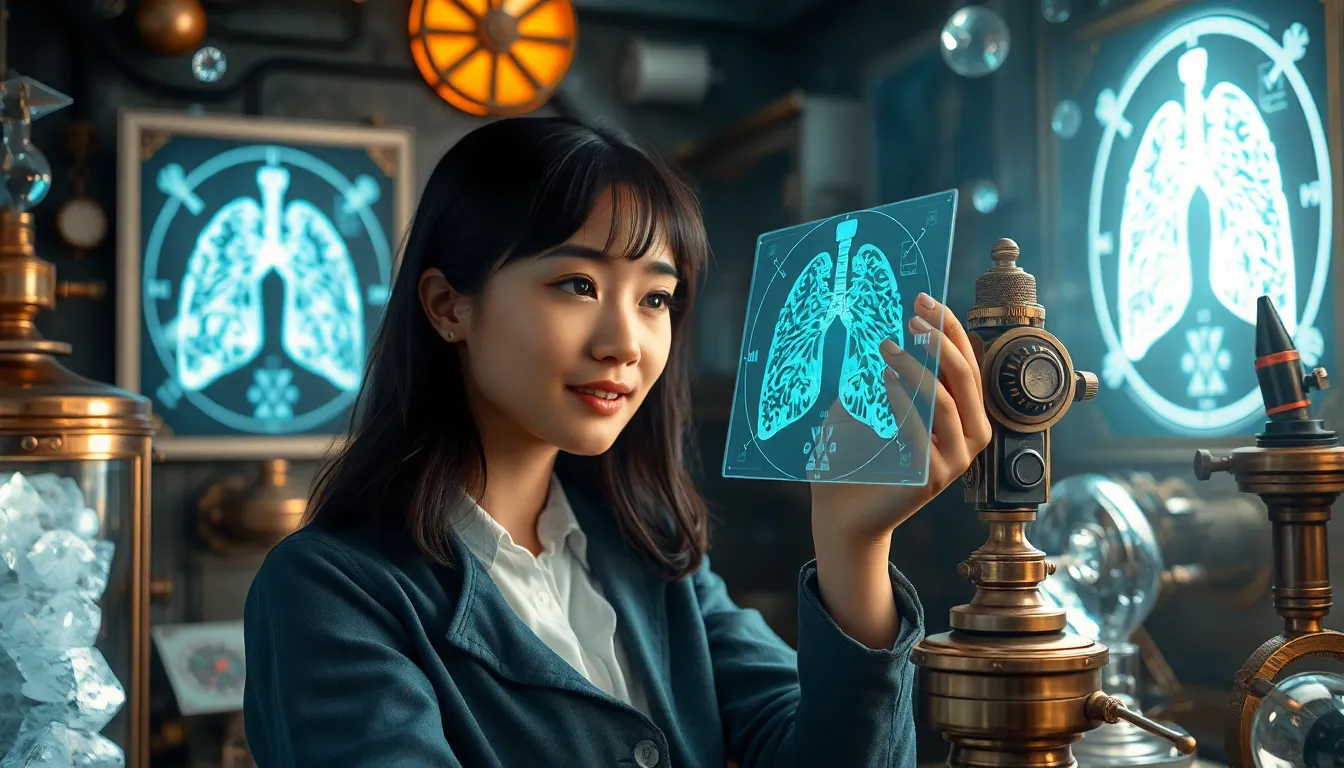

Computer Vision in Healthcare: Detecting Diseases from Scans

Ever wonder how doctors are starting to use computers to spot things in medical images that humans might miss? It’s not about replacing doctors, not even close. It’s about giving them a super-powered assistant, one that can look at hundreds or even thousands of scans and pick out tiny details that could be signs of disease. That’s where computer vision comes in: specifically in healthcare, and more specifically in detecting diseases from scans. It sounds like science fiction, and honestly, parts of it still feel that way. But the progress? It’s been pretty amazing lately.

What Exactly is Computer Vision in Medical Imaging?

Okay, so “computer vision.” It’s a broad term, right? It basically means teaching computers to “see” and interpret images the way we do. In medical imaging, though, we’re not talking about identifying cats in photos (although that’s computer vision too). We’re talking about analyzing X-rays, MRIs, CT scans, and ultrasounds: the whole shebang. The goal is to find patterns and anomalies that could indicate a problem, whether that’s a tumor, a fracture, or signs of a stroke. It’s like giving the computer a really good pair of eyes, plus the ability to learn from every scan it has been trained on. That helps with early detection and diagnosis, which is critical for better patient outcomes. The key point is that the computer isn’t just looking; it’s learning.

How it Works (Sort Of) – The Basics of the Tech

How do you even start teaching a computer to “see” a tumor? The basic idea is this: you feed it tons of images, some with the disease and some without, and you label them: “these are examples of cancer,” “these are normal lungs.” The computer then uses algorithms (fancy math) to learn the differences between the two. These algorithms usually involve “deep learning,” a type of machine learning built on artificial neural networks, which are loosely inspired by how our brains work. It’s complicated stuff, honestly. One of the most common approaches uses convolutional neural networks (CNNs). CNNs are particularly good at recognizing patterns in images because they slide small learned filters across the image: early layers pick up simple features like edges, and deeper layers combine them into more complex shapes. In effect, the computer learns to spot the visual signatures of disease. Frameworks such as TensorFlow and PyTorch are commonly used to build these models, so getting started requires a good understanding of Python (the programming language) and the libraries specific to computer vision.
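To make “learning to spot visual signatures” a bit more concrete, here’s a minimal sketch in plain Python of the core operation inside a CNN layer: sliding a small filter over an image and recording how strongly each patch matches it. The toy 5×5 “scan” and the hand-picked filter are made up for illustration; in a real CNN the filter weights are learned from labeled training data, and frameworks like PyTorch or TensorFlow run this at scale. (Like most deep learning libraries, this technically computes a cross-correlation, which is what CNN layers call “convolution.”)

```python
def conv2d(image, kernel):
    """Valid-mode 2D cross-correlation: the operation CNN layers call 'convolution'."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Multiply the filter by the image patch under it and sum the result.
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


def relu(feature_map):
    """Keep positive responses and zero out the rest (a common CNN activation)."""
    return [[max(0.0, v) for v in row] for row in feature_map]


# Toy 5x5 "scan": dark background with one bright vertical band.
scan = [
    [0, 0, 9, 0, 0],
    [0, 0, 9, 0, 0],
    [0, 0, 9, 0, 0],
    [0, 0, 9, 0, 0],
    [0, 0, 9, 0, 0],
]

# Hand-picked 3x3 filter that responds to a bright center column.
# In a trained CNN these weights would be learned, not hand-written.
vertical_line = [
    [-1, 2, -1],
    [-1, 2, -1],
    [-1, 2, -1],
]

feature_map = relu(conv2d(scan, vertical_line))
for row in feature_map:
    print(row)  # prints [0.0, 54.0, 0.0] on each of the three rows
```

The strong response appears only where the filter sits directly on the bright band. A real network stacks many such filters in layers, and training adjusts their weights until the responses line up with the labels it was given.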

What People Get Wrong (and Where it Gets Tricky)

One big misunderstanding is that computer vision is a magic bullet. It’s not. It’s a tool, and like any tool, it has limitations. If the training data (the images you use to teach the computer) isn’t good enough, or if it’s biased in some way (say, it only includes images from one type of scanner), the computer’s “vision” will be flawed. And even the best computer vision system isn’t perfect. It can make mistakes: false positives (saying there’s a disease when there isn’t) and false negatives (missing a disease that’s there). So it always needs a human expert, usually a radiologist, to interpret the results and make the final diagnosis. Think of the computer as a very skilled assistant, not a replacement. The tricky part is getting the computer to generalize well. What do I mean? The computer might be great at detecting lung nodules in CT scans from one hospital but struggle with scans from a different machine or with slightly different image settings. That’s why you need lots of data, from many different sources, to train a robust system.
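False positives and false negatives are usually summarized with two standard metrics: sensitivity (the share of diseased cases the system catches) and specificity (the share of healthy cases it correctly clears). Here’s a small sketch that computes both from a list of model predictions and the confirmed diagnoses; the labels are invented for illustration.

```python
def confusion_counts(y_true, y_pred):
    """Count true/false positives and negatives (1 = disease, 0 = no disease)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp, fp, tn, fn


def sensitivity_specificity(y_true, y_pred):
    tp, fp, tn, fn = confusion_counts(y_true, y_pred)
    sensitivity = tp / (tp + fn)  # fraction of real disease that was caught
    specificity = tn / (tn + fp)  # fraction of healthy scans correctly cleared
    return sensitivity, specificity


# Hypothetical results for 10 scans: confirmed diagnosis vs. model output.
truth = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
preds = [1, 1, 1, 0, 0, 0, 0, 0, 1, 1]

sens, spec = sensitivity_specificity(truth, preds)
print(f"sensitivity={sens:.2f}, specificity={spec:.2f}")  # sensitivity=0.75, specificity=0.67
```

Here the one missed cancer is a false negative and the two flagged healthy scans are false positives. Neither number alone tells the whole story, which is one more reason a radiologist reviews the output.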

Small Wins That Build Momentum

The good news is that there are small wins that build momentum. For instance, a radiologist might spend a lot of time just counting things, like the number of lesions on a scan. Computer vision can automate that, freeing the radiologist to focus on the more complex parts of diagnosis. Another win is triage. Imagine a hospital where a computer vision system automatically flags scans that are likely to show a serious problem, so those scans are reviewed first. This can speed up diagnosis and treatment, especially in emergencies; early detection of strokes, aneurysms, and internal bleeding can save lives. And yes, these early wins help prove the concept, which makes everyone more willing to invest in the technology and gather even more data.
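Counting lesions is a good example of a task that’s easy to automate once a model has produced a segmentation mask (a per-pixel map of “lesion” vs. “not lesion”). A minimal sketch, assuming such a binary mask already exists, is to count connected regions with a flood fill. The mask below is made up, and production tools would typically use an optimized routine such as `scipy.ndimage.label` instead.

```python
from collections import deque


def count_lesions(mask):
    """Count 4-connected regions of 1s in a binary mask using breadth-first flood fill."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] == 1 and not seen[r][c]:
                count += 1  # found a new, not-yet-visited lesion
                queue = deque([(r, c)])
                seen[r][c] = True
                while queue:
                    y, x = queue.popleft()
                    # Visit up/down/left/right neighbors that are also lesion pixels.
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and mask[ny][nx] == 1 and not seen[ny][nx]):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
    return count


# Hypothetical model output: 1 = pixel predicted as lesion.
mask = [
    [1, 1, 0, 0, 0],
    [1, 0, 0, 1, 0],
    [0, 0, 0, 1, 0],
    [0, 1, 0, 0, 0],
]
print(count_lesions(mask))  # → 3
```

Using 4-connectivity means two pixels that touch only diagonally count as separate lesions; 8-connectivity would merge them, and which one is appropriate depends on the imaging task.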

Specific Applications: From Lungs to Brains (and Beyond)

Okay, so we’ve covered the general idea. But where is this actually being used? Turns out, in quite a few places. Computer vision is making inroads in detecting many kinds of disease across different imaging techniques. Let’s look at a few examples. One of the most exciting areas is lung cancer detection: lung cancer caught early is often treatable, but early-stage tumors are also easy to miss. It’s not just lung cancer, though. Computer vision is also being applied to breast cancer, brain tumors, and even diabetic retinopathy (an eye disease). The variety is kind of staggering, and each application requires a slightly different “eye” from the computer.

Lung Cancer Detection: A Major Focus

Lung cancer screening is a big deal. It uses low-dose CT scans to look for small nodules in the lungs, which could be early signs of cancer. The problem is that radiologists have to review a ton of images, and most of the nodules they find will turn out to be benign (not cancerous). It’s a time-consuming, tiring job, and humans get fatigued and can miss things. Computer vision systems can help by pre-screening the scans and flagging the ones most likely to contain suspicious nodules. This doesn’t replace the radiologist; it makes their job more efficient, so they can focus their attention on the scans that really need it. The computer can also measure the size and density of nodules across follow-up scans, which is important for tracking their growth. The real challenge here is reducing false positives: you don’t want to put someone through unnecessary anxiety and further testing if the nodule is benign. So the algorithms have to be really good, and that means lots of training data.
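Tracking nodule growth usually comes down to comparing measurements from two scans. One widely used summary is the volume doubling time (VDT): how many days the nodule would take to double in volume at its observed growth rate, assuming exponential growth. The sketch below additionally assumes the nodule is roughly spherical, so its volume can be estimated from a single diameter; the measurements are invented, and real software would typically measure volume directly from a 3D segmentation.

```python
import math


def sphere_volume_mm3(diameter_mm):
    """Approximate nodule volume, ASSUMING a sphere: V = pi * d^3 / 6."""
    return math.pi * diameter_mm ** 3 / 6


def volume_doubling_time(v1, v2, days_between):
    """Days for the volume to double, assuming exponential growth between the two scans."""
    if v2 <= v1:
        # Simplifying choice for this sketch: no growth (or shrinkage) is reported as "never doubles".
        return math.inf
    return days_between * math.log(2) / math.log(v2 / v1)


# Hypothetical follow-up: 6 mm nodule on the first CT, 7.5 mm ninety days later.
v_first = sphere_volume_mm3(6.0)
v_second = sphere_volume_mm3(7.5)
vdt = volume_doubling_time(v_first, v_second, days_between=90)
print(f"{v_first:.0f} mm^3 -> {v_second:.0f} mm^3, VDT ~ {vdt:.0f} days")
```

Shorter doubling times are generally more concerning, which is why consistent, automated measurement matters: because volume scales with the cube of the diameter, a small relative error in diameter becomes roughly three times larger in volume.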

Breast Cancer Screening: Mammograms and Beyond

Breast cancer screening is another area where computer vision is making a difference. Mammography is the standard screening method, but it’s not perfect; both false positives and false negatives are a concern. Computer vision can help radiologists by highlighting areas of interest on mammograms: microcalcifications, masses, anything that looks suspicious. It can also be used to analyze other types of breast imaging, like ultrasound and MRI. One interesting development is the use of computer vision to assess breast density, which is a risk factor for breast cancer. Dense breast tissue can make tumors harder to spot on mammograms, so having a computer provide an objective measure of density could be really helpful. This is an area where I expect big strides in the next few years. I would bet my coffee on it.
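As a very simplified illustration of an “objective measure of density,” here’s a sketch that estimates percent density as the share of breast pixels brighter than a threshold (dense fibroglandular tissue tends to appear brighter on a mammogram). The pixel values, the breast mask, and the fixed threshold are all assumptions for illustration; clinical tools use far more sophisticated, validated methods and often report results as BI-RADS density categories.

```python
def percent_density(pixels, breast_mask, dense_threshold):
    """Percentage of breast-region pixels at or above an intensity threshold.

    ASSUMPTION: a single fixed threshold separates dense from fatty tissue.
    """
    breast_pixels = 0
    dense_pixels = 0
    for pixel_row, mask_row in zip(pixels, breast_mask):
        for value, in_breast in zip(pixel_row, mask_row):
            if not in_breast:
                continue  # ignore background outside the breast outline
            breast_pixels += 1
            if value >= dense_threshold:
                dense_pixels += 1
    if breast_pixels == 0:
        raise ValueError("mask contains no breast pixels")
    return 100.0 * dense_pixels / breast_pixels


# Hypothetical 8-bit intensities and a mask marking which pixels are breast tissue.
pixels = [
    [10, 200, 180, 40],
    [15, 220, 90, 30],
    [5, 60, 50, 20],
]
breast_mask = [
    [0, 1, 1, 1],
    [0, 1, 1, 1],
    [0, 1, 1, 1],
]
print(f"{percent_density(pixels, breast_mask, dense_threshold=150):.1f}% dense")  # 33.3% dense
```

The point isn’t the threshold itself but the repeatability: the same image always gets the same number, whereas visual density estimates can vary from reader to reader.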

Neurological Applications: Brain Scans and Stroke Detection

The brain is complex, and brain scans can be hard to interpret. Computer vision is being used to detect a range of neurological conditions, from strokes and aneurysms to brain tumors and Alzheimer’s disease. In stroke detection, time is critical: the faster a stroke is diagnosed and treated, the better the outcome. Computer vision can help radiologists quickly identify signs of a stroke on CT scans, such as bleeding or blood clots. That speeds up triage and helps patients get the treatment they need as soon as possible. In Alzheimer’s disease, computer vision can measure the volume of different brain regions, which helps with diagnosis and with tracking how the disease progresses. Brain tumors are a lot like lung nodules: finding their boundaries and measuring changes over time becomes much more consistent with a little help from computers. Honestly, the applications are pretty wide-ranging.
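Measuring the volume of a brain region, as mentioned for Alzheimer’s disease, is conceptually simple once a model has segmented the region: count the voxels labeled as that structure and multiply by the physical size of one voxel. Here’s a sketch comparing two hypothetical time points. The tiny label maps, the voxel spacing, and the label number used for the region are all made-up assumptions, not a standard atlas.

```python
def region_volume_mm3(label_volume, label, spacing_mm):
    """Volume of all voxels carrying `label`.

    label_volume: nested lists indexed [slice][row][col]
    spacing_mm:  (slice thickness, row spacing, column spacing) in millimetres
    """
    voxel_mm3 = spacing_mm[0] * spacing_mm[1] * spacing_mm[2]
    n_voxels = sum(
        1
        for slice_ in label_volume
        for row in slice_
        for value in row
        if value == label
    )
    return n_voxels * voxel_mm3


def percent_change(before, after):
    return 100.0 * (after - before) / before


REGION = 1  # hypothetical label id for the structure of interest (0 = background)

# Toy segmentations of the same patient at baseline and at follow-up.
baseline = [
    [[0, 1, 1], [0, 1, 1]],
    [[0, 1, 1], [0, 1, 0]],
]
follow_up = [
    [[0, 1, 1], [0, 1, 0]],
    [[0, 1, 0], [0, 1, 0]],
]
spacing = (1.5, 1.0, 1.0)  # assumed slice thickness and in-plane spacing, in mm

v0 = region_volume_mm3(baseline, REGION, spacing)
v1 = region_volume_mm3(follow_up, REGION, spacing)
print(f"{v0} mm^3 -> {v1} mm^3 ({percent_change(v0, v1):+.1f}%)")  # 10.5 mm^3 -> 7.5 mm^3 (-28.6%)
```

Multiplying by the voxel spacing matters: two scanners with different slice thicknesses can produce very different voxel counts for the same anatomy, so raw counts alone aren’t comparable across scans.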

The Challenges: Data, Ethics, and Implementation

Alright, so this all sounds promising, right? But there are challenges, and significant ones. It’s not just about the technology itself. It’s also about data: getting enough of it, making sure it’s high-quality, and protecting patient privacy. Then there are the ethical questions. Who’s responsible when a computer makes a mistake? How do we avoid bias in algorithms? And finally, there’s the practical challenge of getting these systems into hospitals and clinics and making sure they’re used effectively. Think about it: even the coolest technology is useless if doctors don’t trust it or can’t use it easily. It’s a whole system that needs to work together, not just a clever algorithm.

The Data Problem: Quantity, Quality, and Privacy

Computer vision algorithms are data-hungry. They need thousands, sometimes millions, of images to learn effectively. And not just any images: high-quality, well-labeled ones. That means someone, usually a radiologist, has to spend time annotating the images, marking the location of tumors, fractures, or other abnormalities. It’s a huge, expensive task. Data privacy is also a major concern. Medical images carry sensitive patient information, so it’s crucial to protect that data. This usually involves anonymizing (de-identifying) the images, removing any identifying information before they’re used to train algorithms. Then there’s data sharing. Hospitals and institutions each hold their own data, and sharing it can be difficult because of privacy regulations and competitive concerns. So there’s a real need for collaboration and data-sharing initiatives to make these algorithms better. When you think about it, it’s a communal effort: we need to pool resources to make it work.
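Anonymization means stripping or replacing the fields in an image’s metadata that could identify a patient. Here’s a minimal sketch operating on a plain dictionary that stands in for a DICOM header. The attribute names are standard DICOM keywords, but the list is deliberately short and incomplete, and the secret key is a placeholder. Real pipelines follow the DICOM standard’s de-identification profiles (PS3.15) and also have to handle identifiers burned into the pixels themselves.

```python
import hashlib
import hmac

# A deliberately short, incomplete list of identifying DICOM keywords (illustration only).
IDENTIFYING_FIELDS = {
    "PatientName",
    "PatientBirthDate",
    "PatientAddress",
    "ReferringPhysicianName",
    "InstitutionName",
    "AccessionNumber",
}


def pseudonymize_id(patient_id, secret_key):
    """Replace a patient ID with a keyed hash (HMAC-SHA256) so one patient's scans still link up."""
    digest = hmac.new(secret_key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:16]


def deidentify(header, secret_key):
    """Return a copy of the header without identifying fields and with a pseudonymous PatientID."""
    cleaned = {k: v for k, v in header.items() if k not in IDENTIFYING_FIELDS}
    if "PatientID" in cleaned:
        cleaned["PatientID"] = pseudonymize_id(cleaned["PatientID"], secret_key)
    return cleaned


# Hypothetical header values for one CT scan (stand-in for a real DICOM dataset).
header = {
    "PatientName": "DOE^JANE",
    "PatientID": "MRN-004217",
    "PatientBirthDate": "19610314",
    "InstitutionName": "General Hospital",
    "Modality": "CT",
    "SliceThickness": 1.25,
}
clean = deidentify(header, secret_key=b"hypothetical-secret-key")
print(clean)
```

Keeping a keyed pseudonym rather than deleting the ID lets researchers link a patient’s follow-up scans (important for tracking nodule growth, for example) without exposing the real medical record number, as long as the key itself stays secret.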

Ethical Considerations: Bias and Accountability

Bias in algorithms is a worry in any field, and it’s especially important in healthcare. If the training data is biased (say, it only includes images from one demographic group), the algorithm might not perform well on other groups, which could lead to disparities in care. For example, if a lung cancer detection algorithm is trained primarily on images from smokers, it might be less accurate at detecting lung cancer in non-smokers. Accountability is another tricky issue. If a computer vision system makes a mistake, who’s responsible? The doctor? The hospital? The company that developed the algorithm? It’s not always clear-cut. That’s why human oversight matters so much: radiologists need to understand the limitations of these systems and use their own judgment to interpret the results. It’s a collaboration, not a replacement.
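One practical way to catch this kind of bias is to report performance separately for each subgroup instead of as one overall number. Here’s a sketch that computes sensitivity per group; the group names and results are invented, echoing the smoker/non-smoker example.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """records: iterable of (group, has_disease, model_flagged) tuples."""
    caught = defaultdict(int)
    total = defaultdict(int)
    for group, has_disease, flagged in records:
        if has_disease:  # sensitivity only considers patients who truly have the disease
            total[group] += 1
            if flagged:
                caught[group] += 1
    return {g: caught[g] / total[g] for g in total}


# Hypothetical validation results: (group, confirmed cancer?, model flagged it?).
results = [
    ("smoker", True, True), ("smoker", True, True), ("smoker", True, True),
    ("smoker", True, False), ("smoker", False, False),
    ("non-smoker", True, True), ("non-smoker", True, False),
    ("non-smoker", True, False), ("non-smoker", False, True),
]
for group, sens in sensitivity_by_group(results).items():
    print(f"{group}: sensitivity {sens:.2f}")
# smoker: sensitivity 0.75
# non-smoker: sensitivity 0.33
```

A single pooled sensitivity here would be 4 out of 7 (about 0.57), hiding the fact that the model misses most cancers in the non-smoker group.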

Implementation Challenges: Trust and Workflow

Even with the best algorithms and the most ethical intentions, there’s still the challenge of getting these systems into clinical practice. Doctors need to trust the technology, and it needs to fit seamlessly into their workflow. If a computer vision system is difficult to use or slows them down, they won’t use it; that’s just human nature. So the user interface has to be intuitive and the system has to be reliable. There’s also training: radiologists need to learn how to use these systems and how to interpret their results. And hospitals need to invest in the necessary infrastructure, meaning the hardware, the software, and the IT support. Honestly, a lot goes into it. It’s not just plugging in a new program and expecting miracles.

FAQs About Computer Vision and Medical Scans

How accurate is computer vision for detecting diseases compared to human radiologists?

That’s a great question, and the honest answer is that it depends. Computer vision systems can be very accurate at narrow, well-defined tasks, and some studies report performance matching or even exceeding radiologists on tasks like detecting small nodules in lung scans. However, they’re not perfect, and they’re definitely not a replacement for human radiologists. Think of them instead as a second pair of eyes: a tool that can help radiologists make better, faster diagnoses. It’s really about augmenting human skills, not replacing them.

What types of medical scans can computer vision analyze, and what diseases can it help detect?

Computer vision is pretty versatile. It can analyze a wide range of medical images, including X-rays, CT scans, MRIs, ultrasounds, and retinal photographs. It’s being used to help detect all sorts of diseases: lung cancer, breast cancer, brain tumors, strokes, Alzheimer’s disease, and even eye diseases like diabetic retinopathy. The possibilities keep expanding as the technology improves and more data becomes available. And each new scan type brings its own set of findings to look for, including ones that were hard to see before.

How does computer vision handle false positives and false negatives in disease detection?

False positives (saying there’s a disease when there isn’t) and false negatives (missing a disease that’s there) are a concern in any medical test, including computer vision. The goal is to minimize both, but there’s usually a trade-off: tuning a system to catch more disease tends to produce more false alarms, and vice versa. Computer vision systems are trained to be as accurate as possible, but they’re not perfect. That’s why it’s crucial to have human radiologists review the results and make the final diagnosis. To be fair, the better the algorithms get, the fewer mistakes they make, but human oversight is still vital.
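Most detection models output a score (say, a probability of malignancy), and a cutoff turns that score into a yes/no flag. Moving the cutoff is the main knob for trading false positives against false negatives, as this sketch with made-up scores shows.

```python
def errors_at_threshold(scores, labels, threshold):
    """Count false positives and false negatives when flagging every score >= threshold."""
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 1)
    return fp, fn


# Hypothetical model scores and confirmed diagnoses (1 = disease present).
scores = [0.95, 0.80, 0.65, 0.40, 0.70, 0.55, 0.30, 0.20, 0.10, 0.05]
labels = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]

for threshold in (0.3, 0.5, 0.7, 0.9):
    fp, fn = errors_at_threshold(scores, labels, threshold)
    print(f"threshold {threshold:.1f}: {fp} false positives, {fn} false negatives")
```

At 0.3 nothing is missed but three healthy scans get flagged; at 0.9 there are no false alarms but three of the four cancers are missed. A screening tool might deliberately choose a lower threshold and rely on the radiologist to clear the extra flags; where to set it is a clinical decision as much as a technical one.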

How is patient data protected when using computer vision for medical imaging analysis?

Patient data privacy is a top priority. Medical images contain sensitive information, so protecting it really matters. This is typically done by anonymizing the images, removing any identifying information before they’re used for training algorithms or for analysis. Hospitals and research institutions also have strict protocols in place to ensure data security, and regulations like HIPAA (in the US) dictate how medical information can be used and shared. So it’s a complex dance, but a necessary one.

Conclusion: The Future is Looking… Clear?

So, where does all this leave us? Computer vision in healthcare is a big deal. It’s not a perfect fix, and it’s definitely not going to replace doctors. What it *is*, though, is a powerful tool that can help improve the accuracy and efficiency of disease detection. It’s like giving doctors a magnifying glass that can see things they might otherwise miss. The progress has been impressive, honestly, but there are still challenges to overcome. We need more data, we need to address ethical concerns, and we need to make sure these systems are implemented in a way that works for both doctors and patients. One thing I learned the hard way? Don’t underestimate the importance of getting the workflow right. You can have the best algorithm in the world, but if it’s clunky to use, people just won’t use it. It really comes down to collaboration between tech people and medical professionals who understand each other’s worlds. Ultimately, the goal is to make healthcare better: more accurate, more efficient, and more accessible. Computer vision is definitely a step in that direction, and it will be fascinating to see where it goes from here. The potential is there; now it’s about making it real, making it safe, and making it work for everyone.